I was hired onto the Hardware Platform Engineering team at eBay just as the team was getting ready to deploy its next-gen 400G network running SONiC, an open-source network operating system. The team is in charge of validating all of the hardware that runs in eBay’s infrastructure, so it’s critical to find issues BEFORE the gear gets deployed to Production.

This is the story of how a curious network engineer who knew very little about hardware managed to blow up a switch model that was already in production and effect changes to the vendor’s existing product line. Along the way, I also exposed important operational issues that would have been a nightmare to support in the field.

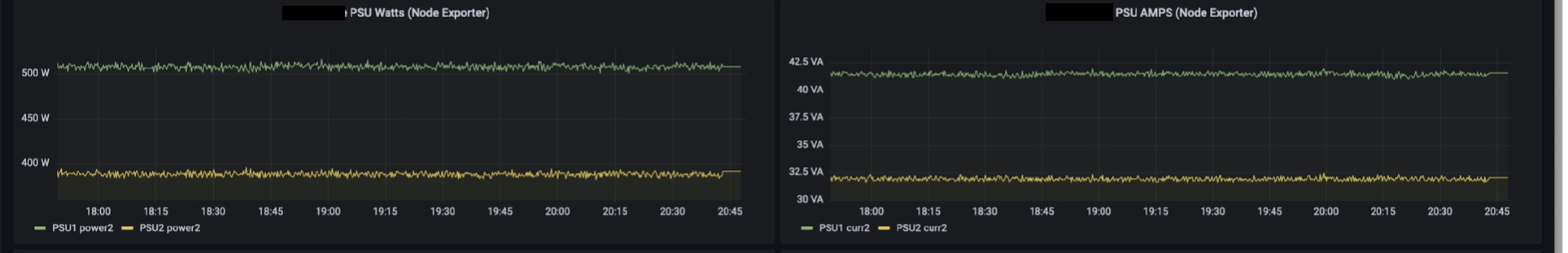

Observability is critical when evaluating any system, whether it’s a manual process, a piece of hardware, or software. You need tests and feedback loops so you can spot patterns or inconsistencies that point to potential landmines before they blow up in your infrastructure. In the examples below, we used Prometheus to collect the data from the switch and Grafana to visualize it in real time.

A power discrepancy between PSU1 and PSU2 was immediately apparent. I’m just a network engineer, but I’ve dabbled in building some of my own hardware, so I was already thinking something was not right here.

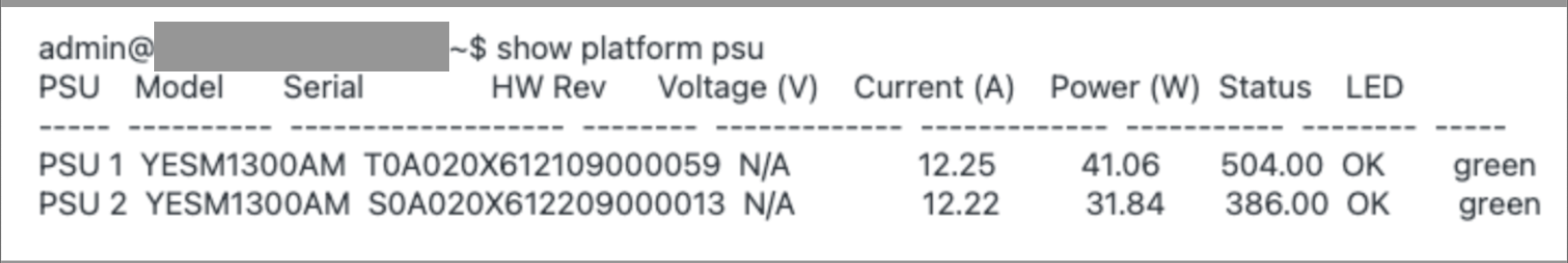

Jumping onto the switch and running the show commands confirmed the huge delta between PSU1 and PSU2. This is NOT how power sharing is supposed to work! One important thing to note here: PSU1 and PSU2 are exactly the same model, but their serial numbers are different.
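A simple automated check could flag this kind of load-sharing imbalance as part of a feedback loop, instead of relying on someone eyeballing a dashboard. The sketch below is hypothetical: it assumes per-PSU output power readings in watts are already available (for example, pulled from Prometheus), and the function names and the 20% threshold are illustrative choices, not values from the switch vendor.

```python
from typing import Mapping, Tuple


def psu_share_imbalance(psu_watts: Mapping[str, float]) -> float:
    """Return the largest relative deviation of any PSU from an even share of the load.

    0.0 means perfectly balanced; 0.6 means one PSU is 60% away from its fair share.
    """
    if len(psu_watts) < 2:
        raise ValueError("need at least two PSUs to compare load sharing")
    total = sum(psu_watts.values())
    if total <= 0:
        raise ValueError("total PSU output must be positive")
    fair_share = total / len(psu_watts)  # what each PSU should carry if sharing evenly
    return max(abs(w - fair_share) / fair_share for w in psu_watts.values())


def check_load_sharing(psu_watts: Mapping[str, float], threshold: float = 0.2) -> Tuple[bool, float]:
    """Return (ok, imbalance); ok is False when imbalance exceeds the (hypothetical) threshold."""
    imbalance = psu_share_imbalance(psu_watts)
    return imbalance <= threshold, imbalance


if __name__ == "__main__":
    # Hypothetical readings: one PSU carrying most of the load.
    print(check_load_sharing({"PSU1": 400.0, "PSU2": 100.0}))
    # Hypothetical readings: roughly even sharing.
    print(check_load_sharing({"PSU1": 260.0, "PSU2": 240.0}))
```

Comparing each PSU against its fair share, rather than just against the other PSU, keeps the check meaningful on platforms with more than two supplies.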

After the switch blew up, the vendor’s root cause analysis told us to NEVER mix power supplies from the 2 DIFFERENT PSU manufacturers, even though the part numbers are the same. Apparently, one of the manufacturers forgot to calibrate its power supplies after retooling them to change the airflow to meet production demands!

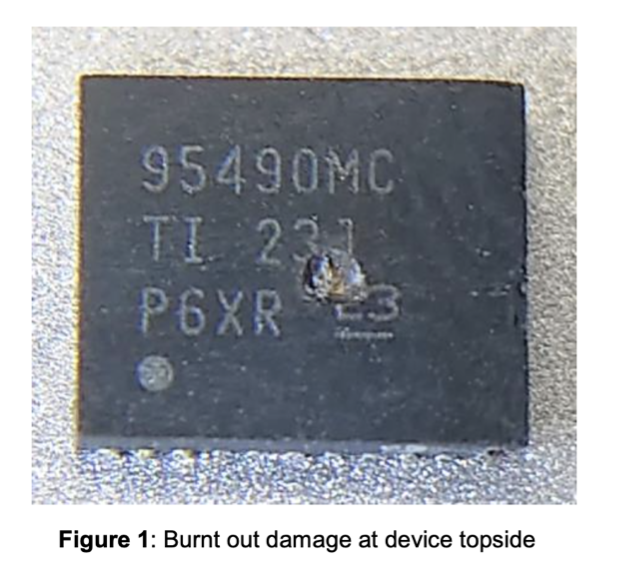

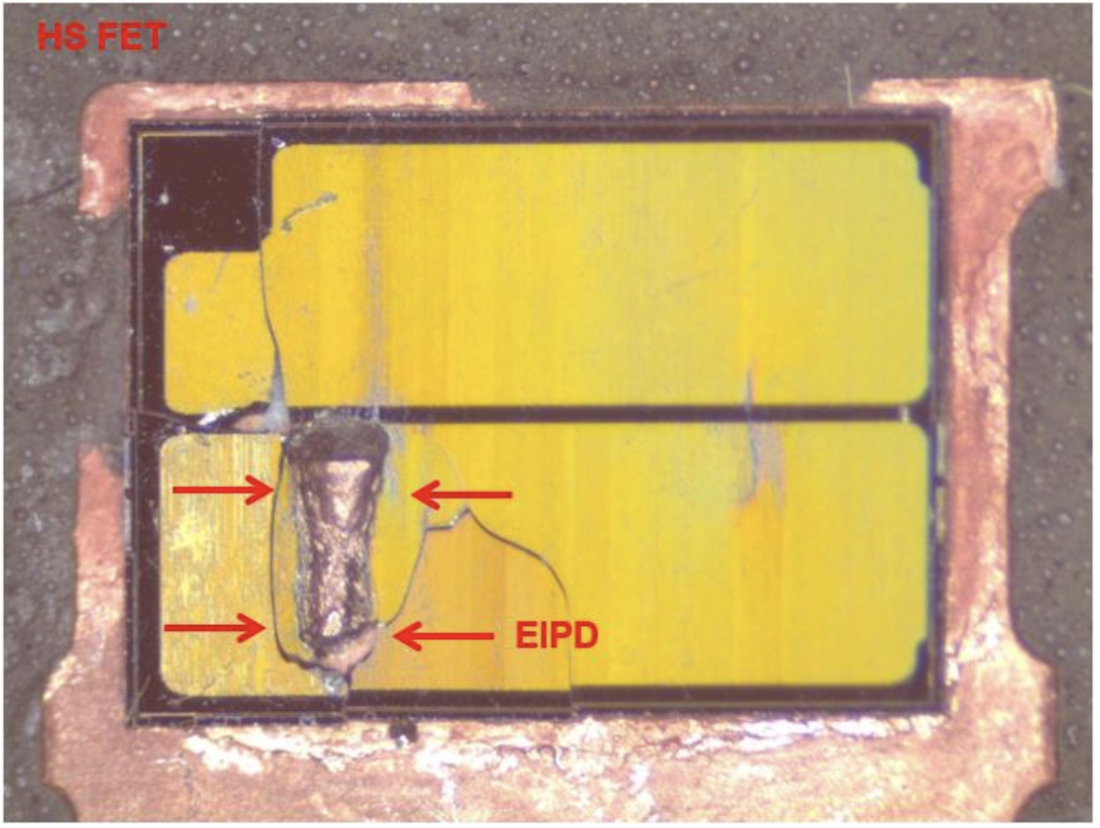

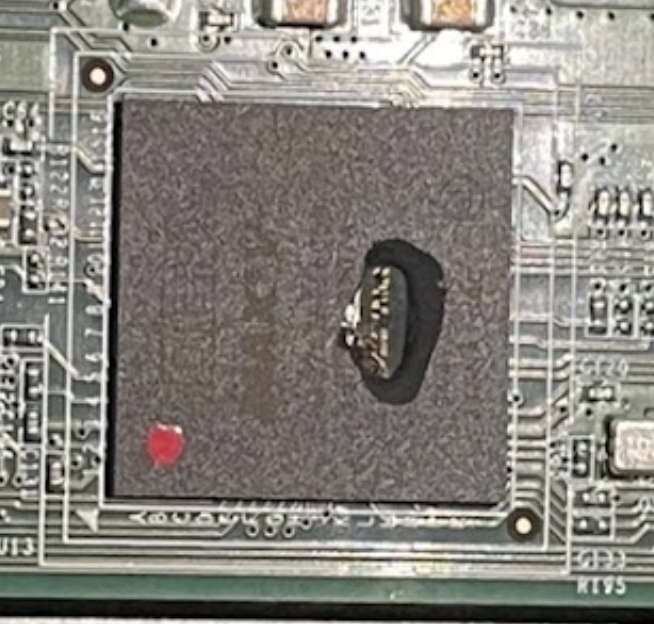

Here is a picture of ONE of the chips that melted. In addition to the switch blowing up, we also lost 16 of the 32 400G optics. Apparently, the chip that handles the 12V to 3.3V conversion did not protect downstream devices from this power event, so the failure effectively cascaded through the switch as it was melting down!

EOS (electrical overstress) damage: 3 chips on the main board and 1 chip on the fan board.

Another issue uncovered during these tests was fluctuating PSU temperature readings, as can be seen here.

This turned out to be another quality control issue at the power supply factory: an empty weld was causing the erratic PSU temperature readings.
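Erratic readings like these can also be caught automatically, since a real PSU temperature cannot swing wildly from one sample to the next. This is a hypothetical sketch, assuming a list of recent temperature samples in °C taken at a fixed interval; the function names and both thresholds (a 5 °C maximum step and a 3 °C standard deviation) are illustrative assumptions, not vendor specifications.

```python
from statistics import pstdev
from typing import Sequence


def max_step_change(samples: Sequence[float]) -> float:
    """Largest absolute change between consecutive samples."""
    return max(abs(b - a) for a, b in zip(samples, samples[1:]))


def is_erratic(samples: Sequence[float], max_step: float = 5.0, max_stdev: float = 3.0) -> bool:
    """Flag a sensor whose readings jump too far between samples or scatter too widely.

    Both thresholds are hypothetical and would need tuning per platform.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    return max_step_change(samples) > max_step or pstdev(samples) > max_stdev


if __name__ == "__main__":
    print(is_erratic([40.0, 40.5, 41.0, 41.2, 41.5]))  # steady warm-up
    print(is_erratic([40.0, 55.0, 38.0, 60.0, 41.0]))  # bouncing sensor
```

The step-change test catches sudden jumps, while the standard deviation test catches readings that drift back and forth without any single large jump.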

The main takeaway here is that observability, pattern recognition, curiosity, and attention to detail are all very important skills to develop.

Nobody told me to mix the power supplies as part of my test regime, but I thought it was a worthwhile test since they had the EXACT same part number and should have been interchangeable!

As a result of these findings, eBay made sure that we received only the GOOD power supplies, and word went out to watch for this potential issue. Can you imagine these PSUs hitting production, someone doing a simple power supply swap, and then the Production switch blowing up?!

Finally, as a result of the issues I helped uncover, the switch has had its hardware and software upgraded to prevent this issue going forward! They beefed up the power chips and added downstream protection for the optics that were fried in the process. Also, the CEO of the company reached out to me to connect on LinkedIn 😉

Never stop asking questions or wondering why something is happening. Always build in feedback loops to ensure your systems are working as you expect them to. DON’T ASSUME THINGS, BE CURIOUS AND ASK QUESTIONS.